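With the virtual machines and the base environment configured, we can move on to building the cluster. Unless noted otherwise, run the following commands on every node (master, node1 and node2).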

[root@master ~]# yum install wget

# Switch to the Aliyun docker-ce repository

[root@master ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# List the docker-ce versions available in the repository

[root@master ~]# yum list docker-ce --showduplicates

# Install a specific docker-ce version

# --setopt=obsoletes=0 is required; otherwise yum automatically installs a newer version

[root@master ~]# yum install --setopt=obsoletes=0 docker-ce-18.06.3.ce-3.el7 -y
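The package spec passed to `yum install` above is the package name joined with a hyphen to the second column of `yum list docker-ce --showduplicates`, minus any epoch prefix such as `3:`. A minimal sketch that performs this conversion for every listed version; `yum_list_to_specs` is a hypothetical helper name, not part of yum:

```shell
#!/bin/sh
# Hypothetical helper: read `yum list docker-ce --showduplicates` output on
# stdin and print one NAME-VERSION-RELEASE spec per docker-ce package line.
yum_list_to_specs() {
  # Package lines look like: docker-ce.x86_64  3:18.09.0-3.el7  docker-ce-stable
  # Header lines such as "Available Packages" do not match and are skipped.
  awk '$1 ~ /^docker-ce\./ {
    ver = $2
    sub(/^[0-9]+:/, "", ver)   # drop the epoch prefix, e.g. 3:
    print "docker-ce-" ver
  }'
}
```

For example, `yum list docker-ce --showduplicates | yum_list_to_specs` should print lines such as `docker-ce-18.06.3.ce-3.el7`, any of which can be handed to `yum install --setopt=obsoletes=0`.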

# Add a configuration file

# By default Docker uses cgroupfs as its cgroup driver, whereas Kubernetes recommends systemd; the file below switches the driver to systemd and points Docker at an Aliyun registry mirror

[root@master ~]# mkdir -p /etc/docker

[root@master ~]# cat <<EOF > /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://kn0t2bca.mirror.aliyuncs.com"]
}
EOF
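The mirror address `https://kn0t2bca.mirror.aliyuncs.com` appears to be the author's personal Aliyun accelerator; Aliyun issues one per account, so you will probably want to substitute your own. A sketch that renders the same file for any mirror URL; `render_daemon_json` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: print a daemon.json that selects the systemd cgroup
# driver and uses the registry mirror passed as the first argument.
render_daemon_json() {
  local mirror="$1"
  cat <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["${mirror}"]
}
EOF
}
```

Usage, with `<your-id>` standing in for your own accelerator ID: `render_daemon_json "https://<your-id>.mirror.aliyuncs.com" > /etc/docker/daemon.json`. Running `python -m json.tool /etc/docker/daemon.json` before restarting Docker is a cheap way to catch JSON typos.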

# Start Docker

[root@master ~]# systemctl restart docker

# Check whether Docker started successfully

[root@master ~]# docker version

Client:

Version: 18.06.3-ce

API version: 1.38

Go version: go1.10.3

Git commit: d7080c1

Built: Wed Feb 20 02:26:51 2019

OS/Arch: linux/amd64

Experimental: false

Server:

Engine:

Version: 18.06.3-ce

API version: 1.38 (minimum version 1.12)

Go version: go1.10.3

Git commit: d7080c1

Built: Wed Feb 20 02:28:17 2019

OS/Arch: linux/amd64

Experimental: false

# Enable Docker at boot

[root@master ~]# systemctl enable docker
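It is worth confirming that `daemon.json` took effect, because if Docker's cgroup driver differs from the kubelet's, the kubelet refuses to start. `docker info --format '{{.CgroupDriver}}'` prints the driver directly; the hypothetical helper below extracts the same value from plain `docker info` output:

```shell
#!/bin/sh
# Hypothetical helper: read `docker info` output on stdin and print the value
# of its "Cgroup Driver:" line (e.g. systemd or cgroupfs).
cgroup_driver_from_info() {
  awk -F': *' '/Cgroup Driver:/ { print $2; exit }'
}
```

With the configuration above, `docker info 2>/dev/null | cgroup_driver_from_info` should print `systemd`.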

# The Kubernetes packages are hosted abroad and download slowly, so switch to a domestic mirror here

# Edit /etc/yum.repos.d/kubernetes.repo and add the following configuration

[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
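The repository file can also be written non-interactively, the same way `daemon.json` was written earlier. A sketch; the helper name `render_k8s_repo` is hypothetical, and the content is the configuration above with both `gpgkey` URLs kept on one line, separated by a space:

```shell
#!/bin/sh
# Hypothetical helper: print the Aliyun Kubernetes yum repository definition.
# The quoted 'EOF' delimiter stops the shell from expanding anything inside.
render_k8s_repo() {
  cat <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```

Usage: `render_k8s_repo > /etc/yum.repos.d/kubernetes.repo && yum makecache fast`.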

# Install kubeadm, kubelet and kubectl

[root@master ~]# yum install --setopt=obsoletes=0 kubeadm-1.17.4-0 kubelet-1.17.4-0 kubectl-1.17.4-0 -y

# Configure the kubelet cgroup driver

# Edit /etc/sysconfig/kubelet and add the following configuration

KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"
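A caveat worth verifying on your own machine: as far as I know, the systemd drop-in installed by the kubeadm RPM (`10-kubeadm.conf`) loads `/etc/sysconfig/kubelet` but only passes `$KUBELET_EXTRA_ARGS` on to the kubelet, so `KUBELET_CGROUP_ARGS` and `KUBE_PROXY_MODE` above are most likely ignored. The cluster probably still works because kubeadm of this era detects Docker's cgroup driver by itself, and in a kubeadm cluster kube-proxy's mode is read from the `mode` field of the `kube-proxy` ConfigMap in the `kube-system` namespace, not from this file. A minimal sketch of a file the drop-in does read; `render_kubelet_sysconfig` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/sysconfig/kubelet whose only variable,
# KUBELET_EXTRA_ARGS, is the one the kubeadm drop-in passes to the kubelet.
render_kubelet_sysconfig() {
  local driver="${1:-systemd}"   # must match Docker's cgroup driver
  printf 'KUBELET_EXTRA_ARGS="--cgroup-driver=%s"\n' "$driver"
}
```

Usage: `render_kubelet_sysconfig > /etc/sysconfig/kubelet`. For IPVS, the `mode` field can be set with `kubectl -n kube-system edit configmap kube-proxy` after `kubeadm init`; the existing kube-proxy pods then have to be recreated to pick up the change.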

# Enable kubelet at boot (it restarts in a loop until kubeadm init or kubeadm join runs; this is expected)

[root@master ~]# systemctl enable kubelet

# Before installing the cluster, the images it needs must be prepared in advance; the required images can be listed with the following command

[root@master ~]# kubeadm config images list

# Pull the images

# These images are hosted in the Kubernetes registry, which cannot be reached for network reasons; the following is a workaround

# Step 1

images=(

kube-apiserver:v1.17.17

kube-controller-manager:v1.17.17

kube-scheduler:v1.17.17

kube-proxy:v1.17.17

pause:3.1

etcd:3.4.3-0

coredns:1.6.5

)

# Step 2

for imageName in "${images[@]}"; do

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

done
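Rather than hard-coding the list in Step 1, the names can come from `kubeadm config images list`, which prints references such as `k8s.gcr.io/kube-apiserver:v1.17.17`. The sketch below (the helper `print_mirror_commands` is hypothetical) reads such references on stdin and prints, instead of running, the same pull/tag/rmi commands as Step 2 so they can be reviewed first. Also note that the `kubeadm init` command later passes `--image-repository registry.aliyuncs.com/google_containers`, so kubeadm will look for images under that prefix rather than `k8s.gcr.io`; `kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.17.17` should pre-pull exactly those.

```shell
#!/bin/sh
# Registry used in Step 2, and the name kubeadm expects by default.
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"
TARGET="k8s.gcr.io"

# Hypothetical helper: read image references (one per line) on stdin and
# print the pull/tag/rmi commands of Step 2 for each of them.
print_mirror_commands() {
  while read -r ref; do
    [ -z "$ref" ] && continue            # skip blank lines
    name="${ref#"$TARGET"/}"             # drop the k8s.gcr.io/ prefix
    printf 'docker pull %s/%s\n' "$MIRROR" "$name"
    printf 'docker tag %s/%s %s/%s\n' "$MIRROR" "$name" "$TARGET" "$name"
    printf 'docker rmi %s/%s\n' "$MIRROR" "$name"
  done
}
```

For example, `kubeadm config images list --kubernetes-version v1.17.17 | print_mirror_commands` shows the commands, and appending `| sh` runs them.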

# Verify that the images were pulled

[root@master ~]# docker images

REPOSITORY                           TAG        IMAGE ID       CREATED       SIZE
k8s.gcr.io/kube-proxy                v1.17.17   3ef67d180564   2 years ago   117MB
k8s.gcr.io/kube-controller-manager   v1.17.17   0ddd96ecb9e5   2 years ago   161MB
k8s.gcr.io/kube-scheduler            v1.17.17   d415ebbf09db   2 years ago   94.4MB
k8s.gcr.io/kube-apiserver            v1.17.17   38db32e0f351   2 years ago   171MB
k8s.gcr.io/coredns                   1.6.5      70f311871ae1   3 years ago   41.6MB
k8s.gcr.io/etcd                      3.4.3-0    303ce5db0e90   3 years ago   288MB
k8s.gcr.io/pause                     3.1        da86e6ba6ca1   5 years ago   742kB

Note: the following steps only need to be run on the master node.

# Create the cluster; --apiserver-advertise-address must be this machine's IP

[root@master ~]# kubeadm init \
    --apiserver-advertise-address=192.168.145.100 \
    --image-repository registry.aliyuncs.com/google_containers \
    --kubernetes-version=v1.17.17 \
    --service-cidr=10.96.0.0/12 \
    --pod-network-cidr=10.244.0.0/16
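`--service-cidr` and `--pod-network-cidr` must not overlap each other or the addresses of the nodes themselves, and `10.244.0.0/16` is also the default pod network in the flannel manifest used later. A small shell sketch for checking that two IPv4 CIDR blocks are disjoint; all function names are hypothetical:

```shell
#!/bin/sh
# Hypothetical helpers for sanity-checking IPv4 CIDR blocks.

# Convert a dotted quad such as 10.96.0.0 to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split into the four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print the first and last address of a CIDR block as integers.
cidr_range() {
  ip=${1%/*}
  len=${1#*/}
  n=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  start=$(( n & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Succeed (exit status 0) if the two CIDR blocks share any address.
cidrs_overlap() {
  r1=$(cidr_range "$1")
  r2=$(cidr_range "$2")
  s1=${r1% *}; e1=${r1#* }
  s2=${r2% *}; e2=${r2#* }
  [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}
```

For example, `cidrs_overlap 10.96.0.0/12 10.244.0.0/16 && echo overlap || echo "no overlap"` prints `no overlap`; a node IP can be checked as a `/32`. Octets written with leading zeros would be read as octal by shell arithmetic, so pass plain dotted quads.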

# Create the required files as instructed by the kubeadm init output

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

When the kubeadm init command above finishes, it prints output like the following; make a note of the key information.

Your Kubernetes control-plane has initialized successfully! # installation succeeded

To start using your cluster, you need to run the following as a regular user:

# What still needs to be done

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# The cluster is installed, but it has no pod network yet; one still has to be deployed

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

# To add worker nodes to the cluster, run the following command on each node as root

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.145.100:6443 --token ez0nsy.mwcwhlp84ubowcr1 \
    --discovery-token-ca-cert-hash sha256:054584d999625b5a39f7ccf02aefa173f21926603f6ccd219fb37fa0245ea580

Note: the following step is run only on the worker nodes; copy the command printed above and run it on each of them.

[root@node2 ~]# kubeadm join 192.168.145.100:6443 --token ez0nsy.mwcwhlp84ubowcr1 \
> --discovery-token-ca-cert-hash sha256:054584d999625b5a39f7ccf02aefa173f21926603f6ccd219fb37fa0245ea580
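If the `kubeadm init` output was saved (for example with `kubeadm init ... | tee kubeadm-init.log`; the file name is only an example), the join command can be recovered from it later. If the output is lost, or the bootstrap token has expired (tokens created by `kubeadm init` default to a 24-hour lifetime), running `kubeadm token create --print-join-command` on the master prints a fresh one. A sketch of the first option; `extract_join_command` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: read saved `kubeadm init` output on stdin and print
# the `kubeadm join ...` command, folding backslash-continued lines into one.
extract_join_command() {
  awk '
    /^[ \t]*kubeadm join/ { collecting = 1; cmd = "" }
    collecting {
      line = $0
      sub(/^[ \t]+/, "", line)                 # trim leading indentation
      if (line ~ /\\[ \t]*$/) {                # line continues on the next one
        sub(/[ \t]*\\[ \t]*$/, "", line)
        cmd = cmd line " "
      } else {
        print cmd line
        collecting = 0
      }
    }'
}
```

Usage: `extract_join_command < kubeadm-init.log` prints a single line that can be pasted on each worker node.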

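Back on the master, list all nodes to verify that they joined. They show NotReady for now because no pod network add-on has been deployed yet: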

[root@master ~]# kubectl get nodes

NAME     STATUS     ROLES    AGE   VERSION
master   NotReady   master   10m   v1.17.4
node1    NotReady   <none>   69s   v1.17.4
node2    NotReady   <none>   4s    v1.17.4

Kubernetes supports many network add-ons, such as flannel, calico and canal; any one of them will do. This guide uses flannel.

Note: run this only on the master node.

[root@master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
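flannel takes its pod network from the `net-conf.json` section of `kube-flannel.yml`, and that `Network` value has to match the `--pod-network-cidr` passed to `kubeadm init` (10.244.0.0/16 here). Before applying the downloaded manifest, a quick check could look like this; `flannel_network` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: read a flannel manifest on stdin and print the value
# of the "Network" key in its net-conf.json section.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p'
}
```

Usage: `flannel_network < kube-flannel.yml` should print `10.244.0.0/16`; if it prints something else, edit the manifest or the `kubeadm init` flag so the two agree.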

[root@master ~]# kubectl apply -f kube-flannel.yml

After a minute or two, check the node status again:

[root@master ~]# kubectl get nodes

NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   21m   v1.17.4
node1    Ready    <none>   12m   v1.17.4
node2    Ready    <none>   11m   v1.17.4

Once every node shows Ready, the cluster is up.
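Instead of re-running `kubectl get nodes` by hand, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` should block until every node reports Ready. For scripts that work from the table output, a hypothetical helper that counts the nodes whose STATUS does not start with Ready:

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes` output on stdin (header line
# included) and print how many nodes are not Ready yet.
count_not_ready() {
  awk 'NR > 1 && $2 !~ /^Ready/ { n++ } END { print n + 0 }'
}
```

Usage: `kubectl get nodes | count_not_ready` prints `3` for the first listing above and `0` once all nodes are Ready.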

Note: the following steps are run only from the master node.

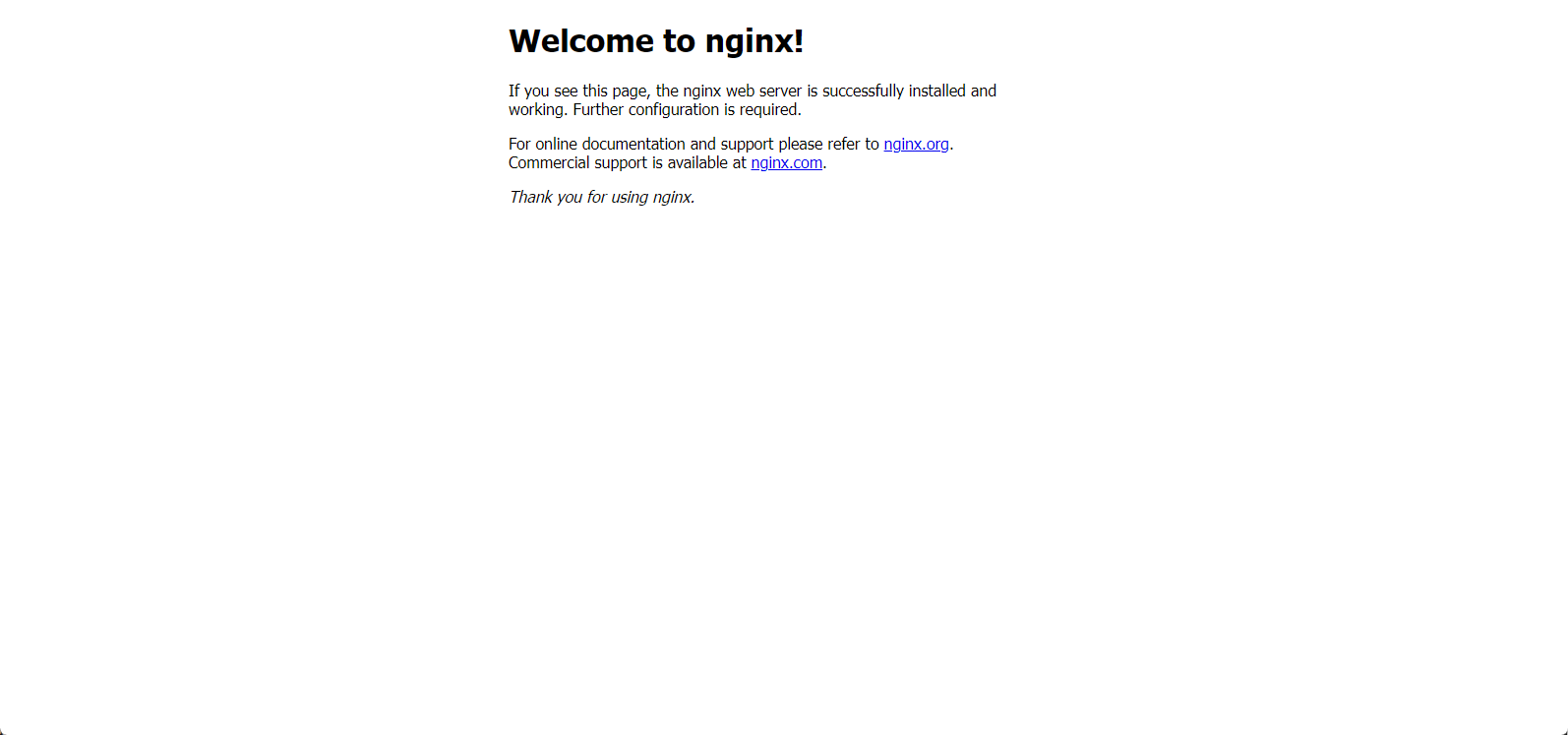

# Deploy nginx

[root@master ~]# kubectl create deployment nginx --image=nginx:1.14-alpine

# Expose the port

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

# Check the status of the pod and the service

[root@master ~]# kubectl get pod

NAME                     READY   STATUS    RESTARTS   AGE
nginx-6867cdf567-pmj4d   1/1     Running   0          2m13s

[root@master ~]# kubectl get service

NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        27m
nginx        NodePort    10.97.243.70   <none>        80:30322/TCP   83s

# Note the PORT(S) column: nginx's port 80 is mapped to 30322, i.e. the service is exposed externally on port 30322
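The NodePort can also be read without eyeballing the table, e.g. `kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'`. If you only have a `PORT(S)` value at hand, the hypothetical helper below extracts the node port from a string such as `80:30322/TCP`:

```shell
#!/bin/sh
# Hypothetical helper: turn a PORT(S) value such as 80:30322/TCP into the
# node port; with several ports listed, the first one is returned.
nodeport_from_ports() {
  local ports="$1"
  ports="${ports#*:}"      # strip the service port: 30322/TCP
  echo "${ports%%/*}"      # strip the protocol:     30322
}
```

Usage: `curl http://192.168.145.100:$(nodeport_from_ports 80:30322/TCP)/` should return the nginx welcome page.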

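Open http://192.168.145.100:30322/ in a browser to verify (a NodePort service listens on that port on every node, so any node's IP works). If the nginx welcome page appears, the cluster has been set up successfully.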
